The new architecture of elibom.com

In a few days we will launch a new version of elibom.com, an SMS Gateway for Latin America that I co-founded a while ago. From banks notifying customers of unusual transactions to small businesses sending appointment reminders, we currently have hundreds of customers using our web application and sending millions of messages per month.

In this post I’ll explain the technical details behind the new version, which is currently hosted at beta.elibom.com.

Architecture

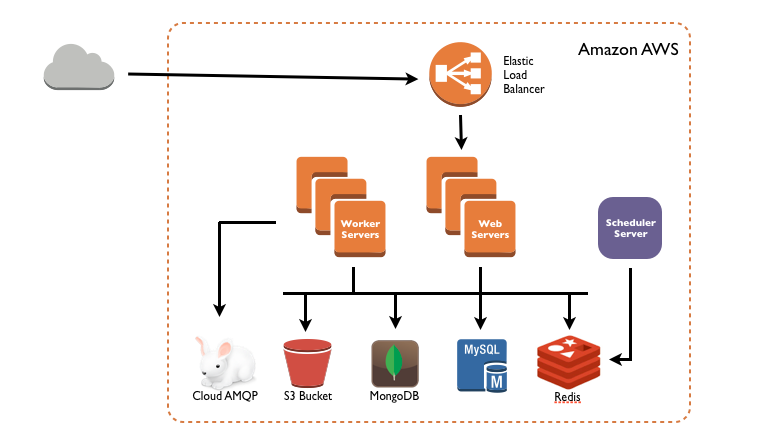

The following diagram shows the main components of the new architecture:

Modules

The application is divided into three modules: web, worker and scheduler. Web modules handle HTTP requests, worker modules execute background jobs, and the scheduler module triggers scheduled text messages and periodic tasks (e.g. to generate statistics or clean data). Notice that there are no hard dependencies between them.

Web and worker modules are deployed on multiple EC2 instances (m1.small), while the scheduler module is deployed on a single free Heroku dyno. We use Heroku for the scheduler instance because, besides being free, it monitors the process and restarts it if it fails.

Notice that there is only one instance of the scheduler module, and for good reason: with more than one instance, unexpected things would happen (e.g. scheduled messages could be triggered multiple times). Besides, the scheduler module doesn’t need to scale because it does little work, albeit important work.
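To make the single-instance constraint concrete, here is a minimal sketch of a scheduler tick in Java. It is not our actual code: `ScheduledMessage`, `SchedulerSketch` and the in-memory pending list are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical scheduled text message: an id and the time (epoch millis) it is due.
final class ScheduledMessage {
    final String id;
    final long dueAtMillis;

    ScheduledMessage(String id, long dueAtMillis) {
        this.id = id;
        this.dueAtMillis = dueAtMillis;
    }
}

// Sketch of the scheduler's periodic tick; an assumption, not the real implementation.
final class SchedulerSketch {
    private final List<ScheduledMessage> pending = new ArrayList<>();

    void schedule(ScheduledMessage message) {
        pending.add(message);
    }

    // Returns the ids of the messages that are due at nowMillis and removes them
    // from the pending list, so a later tick does not trigger them again.
    List<String> tick(long nowMillis) {
        List<String> triggered = new ArrayList<>();
        for (Iterator<ScheduledMessage> it = pending.iterator(); it.hasNext();) {
            ScheduledMessage message = it.next();
            if (message.dueAtMillis <= nowMillis) {
                triggered.add(message.id);
                it.remove();
            }
        }
        return triggered;
    }
}
```

A single instance triggers each message once. Two independent instances that both read the same pending messages before either one marks them as triggered would each return the same ids, which is exactly the duplicate-delivery problem mentioned above.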

So, why not host everything on Heroku? Well, it turned out that Heroku dynos were too small for our web and worker processes. We also experienced some latency to other services. I don’t know exactly why: Heroku is supposed to deploy all instances in us-east-1, but you can’t really tell. Anyway, that’s still an option we are considering.

External services

An Amazon RDS for MySQL instance (Multi-AZ) acts as our main database. We also use MongoDB (powered by MongoLab) to store data that doesn’t quite fit the relational model. Redis (powered by OpenRedis) is used to store session information and volatile data, and for inter-module communication.

We use Amazon S3 to store files and assets.

Although not shown in the diagram, there is an external service called Mokai that we use to actually deliver text messages to mobile operators. The communication between our web application and Mokai goes through CloudAMQP, a hosted messaging service built on top of RabbitMQ.

Technologies

Front End

Our front end is built using HTML5, CSS3 and JavaScript. CSS is generated using LESS. Twitter Bootstrap is used for the layout and for some visual components.

Backbone, Underscore and jQuery are the JavaScript libraries we use the most. We deliberately decided not to build a single-page application, so we only use part of Backbone: the views, and occasionally models and collections.

Back End

The MVC framework we use is Jogger, which brings the best ideas of other frameworks such as Sinatra, Flask, and Express.js to the Java language. The views are generated using FreeMarker, a templating engine for Java.

We use Hibernate as the ORM and Hazelcast as the second-level cache. Spring Framework glues everything together.

To execute background jobs we use Jesque, a Java port of Resque, the Redis-backed library for enqueuing and processing background jobs. The web and scheduler modules enqueue jobs that the worker module dequeues and executes asynchronously.
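The enqueue/dequeue flow can be sketched in plain Java as follows. This does not use the Jesque API: an in-memory `ConcurrentLinkedQueue` stands in for the Redis-backed queue, and `JobSketch`, `JobQueueSketch` and `WorkerSketch` are hypothetical names chosen for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical job description: a job name plus a single argument.
final class JobSketch {
    final String name;
    final String argument;

    JobSketch(String name, String argument) {
        this.name = name;
        this.argument = argument;
    }
}

// In-memory stand-in for the Redis-backed queue (an assumption for illustration).
final class JobQueueSketch {
    private final Queue<JobSketch> queue = new ConcurrentLinkedQueue<>();

    // Called by the web and scheduler modules; returns immediately.
    void enqueue(JobSketch job) {
        queue.add(job);
    }

    // Called by a worker; returns the oldest job, or null if the queue is empty.
    JobSketch dequeue() {
        return queue.poll();
    }
}

// Worker side: takes jobs off the queue and "executes" them in FIFO order.
final class WorkerSketch {
    // Processes jobs until the queue is empty and returns a log of what ran.
    List<String> drain(JobQueueSketch queue) {
        List<String> executed = new ArrayList<>();
        JobSketch job;
        while ((job = queue.dequeue()) != null) {
            executed.add(job.name + ":" + job.argument);
        }
        return executed;
    }
}
```

The point of the pattern is that the producer only pays the cost of `enqueue`, while the slow work happens later in a worker. With a Redis-backed queue, producers and workers can also live in different processes and on different machines, which is what keeps the modules free of hard dependencies on each other.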

Deployment and monitoring

Our deployment process is not as automated as I would like it to be, but that’s something we are working on. We are using CloudBees as our CI server, but it doesn’t publish the artifacts after a successful push. We have to do it manually in two steps.

First, we create the distribution files using an EC2 instance with a script that pulls from our GitHub repository, builds the Maven project and publishes the artifacts to S3.

Then, to actually deploy the changes we have to launch new EC2 instances passing a User Data script that gets executed when the instance starts. The script downloads the distribution file, uncompresses it and starts the application. There are two User Data scripts: one for the web instances and the other for worker instances. Finally, we manually add the new web instances to the load balancer.

Yeah, lots of improvements to make. However, it allows us to continuously deploy with zero downtime.
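The reason this gives zero downtime is the order of operations: new instances start serving before old ones stop. Here is a toy model of that idea in Java. It assumes that old instances are removed from the load balancer only after the new ones are registered, a step the description above does not spell out. `LoadBalancerSketch` and `RollingDeploySketch` are hypothetical names, not an AWS API.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Toy model of a load balancer's instance pool (hypothetical, not an AWS client).
final class LoadBalancerSketch {
    private final Set<String> instances = new LinkedHashSet<>();

    void register(String instanceId) {
        instances.add(instanceId);
    }

    // Refuses to remove the last registered instance, since that would mean downtime.
    void deregister(String instanceId) {
        if (instances.size() == 1 && instances.contains(instanceId)) {
            throw new IllegalStateException("refusing to remove the last instance");
        }
        instances.remove(instanceId);
    }

    List<String> instances() {
        return new ArrayList<>(instances);
    }
}

final class RollingDeploySketch {
    // Adds the new instances first and only then removes the old ones, so at
    // least one instance is registered at every step of the swap.
    static void swap(LoadBalancerSketch lb, List<String> oldIds, List<String> newIds) {
        for (String id : newIds) {
            lb.register(id);
        }
        for (String id : oldIds) {
            lb.deregister(id);
        }
    }
}
```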

Deploying to Heroku is a lot easier: we just push the code to Heroku’s git repo and we are done! Our tests showed that it does involve some downtime, but because we only have the scheduler module there, it’s not a real issue.

Finally, we are using New Relic to monitor our instances. It is expensive but incredibly powerful.

I hope this post has given you valuable information for your current or future projects. The idea was to give you an overview of what is possible and which technologies make it possible. If you have questions or want me to expand on a specific topic, just let me know and I’ll do my best.